Voice technology is revolutionizing how we interact with digital platforms. With Gen Z users increasingly preferring voice commands over traditional text input, the shift toward voice-first experiences has never been more critical. Enter VocRT - an open-source, comprehensive Voice-to-Voice AI solution that's changing the game for developers, businesses, and educational institutions worldwide.

What Makes VocRT Different?

Unlike traditional chatbots that rely on text-based interactions, VocRT offers ultra-low latency voice-to-voice conversion with seamless interruption handling. This means your users can have natural, flowing conversations with AI - just like talking to a human.

Key Features That Set VocRT Apart:

Real-Time Processing

Experience conversations with minimal delay, creating natural dialogue flows that keep users engaged.

Complete Privacy

All processing happens locally on your machine - no data ever leaves your environment, ensuring 100% privacy compliance.

Advanced RAG Capabilities

Seamlessly integrate unlimited PDFs, documents, spreadsheets, presentations, and web content—enabling intelligent, context-aware AI conversations.

Zero API Costs

No recurring charges or usage limits - once installed, VocRT runs completely offline.

Quick Installation Guide

Prerequisites

- Python 3.10 (required)

- Node.js 16+ and npm

- Docker for Qdrant vector database

- Git for cloning repositories

1. Clone the Repository

git clone https://huggingface.co/anuragsingh922/VocRT

cd VocRT2. Set Up Python Environment

python3.10 -m venv venv

source venv/bin/activate # On Windows: venv\Scripts\activate

pip install -r requirements.txt3. Install eSpeak

Ubuntu/Debian

sudo apt-get update

sudo apt-get install espeakmacOS

Install Homebrew if not already present, then install eSpeak:

# Install Homebrew if not present

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

# Install eSpeak

brew install espeakWindows

1. Download from the eSpeak official website

2. Run the installer and follow the setup instructions

3. Add the installation path to your system PATH environment variable

Verification

Test your eSpeak installation with the following commands:

# Check eSpeak version

espeak --version

# Test eSpeak functionality

espeak "VocRT installation successful!"4. Launch Backend Services

cd backend

npm install

npm run dev5. Start Frontend

cd frontend

npm install

npm run dev6. Initialize Qdrant Database

docker run -p 6333:6333 -p 6334:6334 \

-v "$(pwd)/qdrant_storage:/qdrant/storage:z" \

qdrant/qdrantAccess Points

http://localhost:6333http://localhost:6333/dashboardhttp://localhost:6334Once the container is running, you can access these endpoints to interact with your Qdrant database.

7. Configure Environment

Edit your .env file with your preferred LLM provider:

# LLM Configuration

OPENAI_API_KEY=your_openai_api_key_here

GEMINI_API_KEY=your_gemini_api_key_here

LLM_PROVIDER=google # or 'google' for Gemini

LLM_MODEL=gemini-2.0-flash # or your preferred model7. Download Required Models

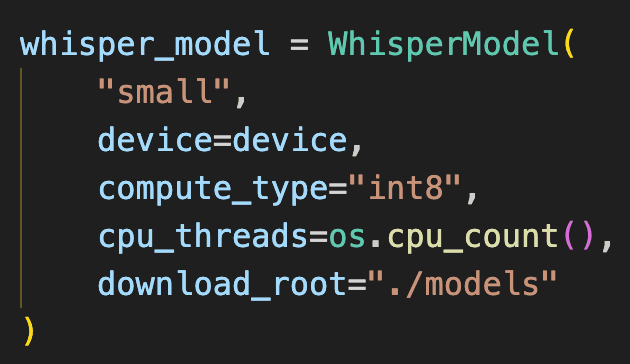

Whisper STT Model

The Whisper model will be automatically downloaded when you first run the application. Simply specify your preferred model size in app.py:

Model Size Recommendations:

- tiny: Fastest processing, lower accuracy (~39 MB)

- base: Balanced performance and accuracy (~74 MB)

- small: Better accuracy, moderate speed (~244 MB)

- medium: High accuracy, slower processing (~769 MB)

- large: Highest accuracy, slowest processing (~1550 MB)

9. Launch VocRT

python3 app.pyNavigate to http://localhost:3000 and start your first voice conversation!

Real-World Applications

Customer Support Revolution

Transform your customer service with AI agents that understand context, remember previous interactions, and provide human-like responses. VocRT's interruption handling means customers can ask follow-up questions naturally.

Educational Enhancement

Create interactive learning experiences where students can ask questions verbally and receive immediate, contextual responses based on course materials you've uploaded.

Accessibility Solutions

Empower users with visual impairments or mobility challenges to interact with your platform through natural voice commands.

Healthcare Innovation

Develop secure, HIPAA-compliant voice interfaces for patient interactions, appointment scheduling, and medical information retrieval.

Performance Optimization Tips

Hardware Recommendations

Configuration Best Practices

What's Next?

VocRT represents just the beginning of voice-first AI interactions. With over 90 combined downloads across Hugging Face and Docker platforms, the community is rapidly growing.

The upcoming VocRT 3.0 promises even more groundbreaking features, including enhanced multilingual support, improved voice synthesis quality, and advanced conversation management.

Join the Voice AI Revolution

Ready to integrate cutting-edge voice AI into your project? VocRT's MIT license and comprehensive documentation make it the perfect choice for developers who want full control over their voice AI implementation.

Transform your user experience with VocRT - where privacy meets performance in real-time voice AI.